Colliders performance

Results

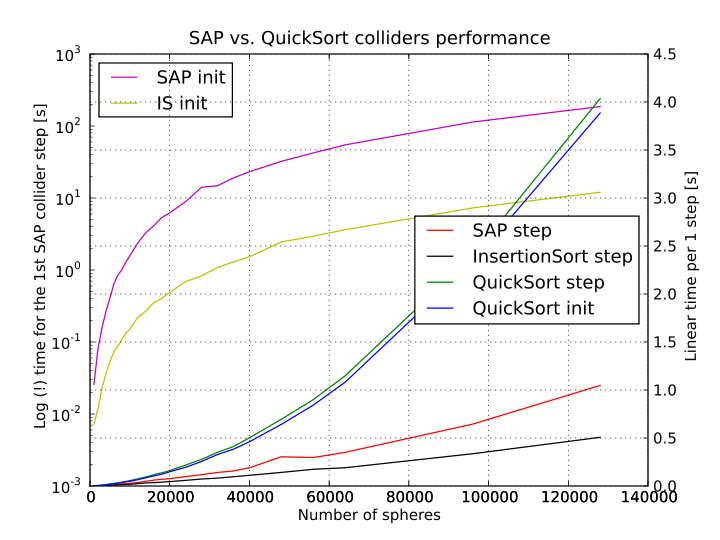

This graph shows

- "init": time for the first step

- "step": average time over the next 100 steps (normalized per step)

- I had to plot the first-step time of PersistentSAPCollider on a log scale.

- Machine: Intel i7 2.7GHz, DDR3 RAM

- SpatialQuickSortCollider scales with N^2 and is significantly slower, especially for big packings; the initial step is not significantly longer than a regular step.

- PersistentSAPCollider scales somewhat worse than N×log N. The first step is significantly slower than the following ones.

- InsertionSortCollider scales the same way as PersistentSAPCollider, but in absolute numbers it is about 50% faster in regular steps and over 10x (!!) faster on the initial step.
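
One way to check scaling claims like the ones above is to fit the slope of log(time) against log(N): a slope near 2 indicates N^2, and a slope a little above 1 is what N×log N looks like over this range of sizes. The sketch below is only an illustration. The helper name `loglog_slope` is made up here, and the timings are synthetic values generated from the two formulas, not measurements taken from the graphs.

```python
import math

def loglog_slope(sizes, times):
    """Least-squares slope of log(time) versus log(size) (hypothetical helper)."""
    xs = [math.log(n) for n in sizes]
    ys = [math.log(t) for t in times]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    den = sum((x - mean_x) ** 2 for x in xs)
    return num / den

# Synthetic timings (NOT measured data): generated from the two scaling laws
# with arbitrary prefactors, only to show what the fitted slopes look like.
sizes = [5000, 25000, 50000, 100000, 200000]
quadratic_times = [1e-9 * n ** 2 for n in sizes]
nlogn_times = [1e-7 * n * math.log(n) for n in sizes]

slope_quadratic = loglog_slope(sizes, quadratic_times)
slope_nlogn = loglog_slope(sizes, nlogn_times)

print("N^2 slope:      %.3f" % slope_quadratic)
print("N log N slope:  %.3f" % slope_nlogn)
```

To use it on real runs, replace the synthetic lists with the per-step times read from your own logs. The log format is not described on this page, so parsing is left out.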

Running

    $ cd examples/collider-perf
    $ export OMP_NUM_THREADS=1 # to make sure, for openMP-enabled builds
    $ yade-trunk-opt-multi perf.table perf.py
    $ python mkGraph.py *.log

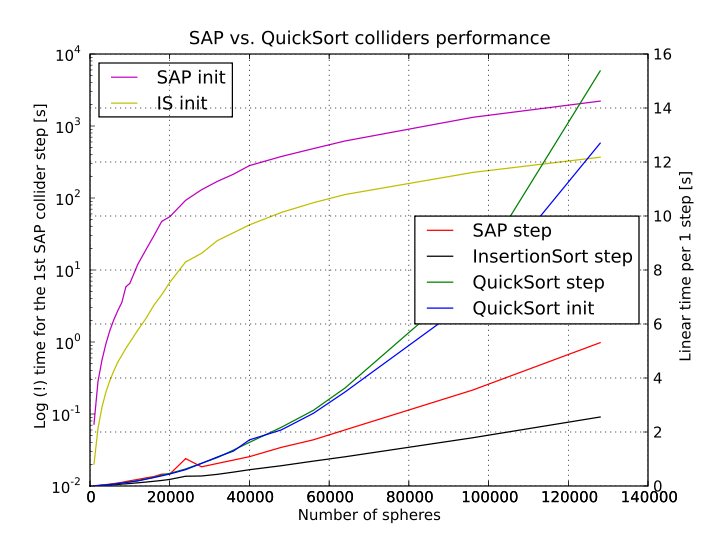

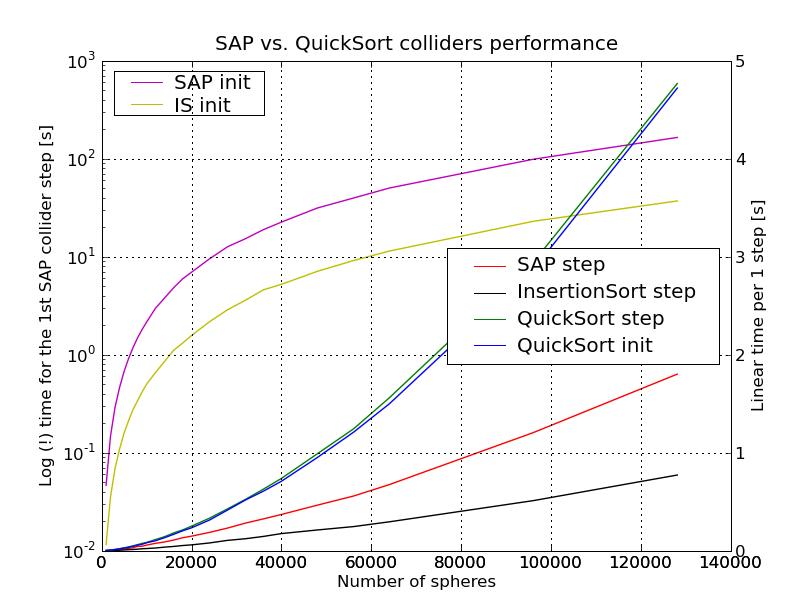

Other machines

- Machine: AMD Athlon(tm) XP 2100+, 1.7GHz

- Machine: Intel(R) Xeon(R) CPU E5410 @ 2.33GHz

TODO (post your graphs here, with machine description)

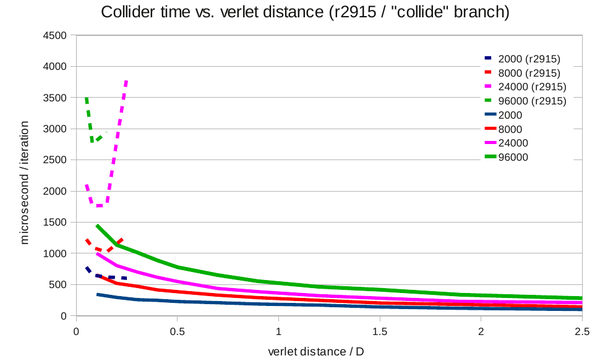

Improved InsertionSort

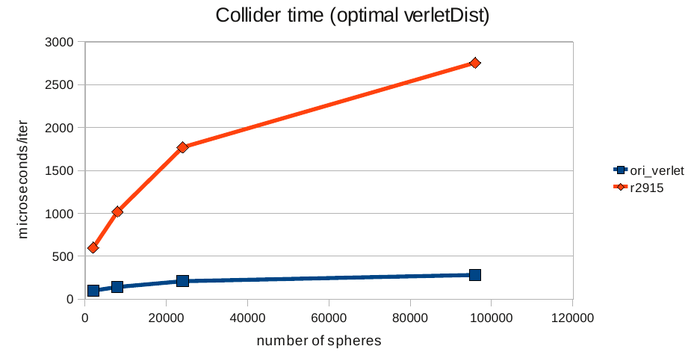

Results obtained with a modified version of the insertion sort collider are given below (5000 iterations in the initial phase of an isotropic confinement, single thread, Intel(R) Xeon(R) CPU W3530 @ 2.80GHz). The code is a candidate for release (https://code.launchpad.net/~bruno-chareyre/yade/collide2).

Unlike the results above, the times are given per simulation step, not per execution of collider::action(). The speedup approaches x10 for 96k spheres; in terms of total time for one step, it is slightly higher than x2. A very large Verlet distance can be used with the modified version, whereas the optimum is always near 0.07 for r2915.

The improvement is more noticeable in multithreaded runs, since the modified version keeps the ratio of collider cost to interaction loop cost small. With 96k particles and the larger Verlet distance, the collider accounts for 16% of the total CPU time (initial step excluded).

Comparison on isotropic confinement

Comparison on scripts/test/performance/checkPerf.py (iter/sec)

1 thread

- bzr2915

5037 spheres, velocity= 110.359344232 +- 0.16947155531 %

25103 spheres, velocity= 27.4394736967 +- 1.47630822246 %

50250 spheres, velocity= 19.0980032815 +- 0.126775658881 %

100467 spheres, velocity= 9.22476120323 +- 0.174335620671 %

200813 spheres, velocity= 2.15555271381 +- 1.26679585962 %

- candidate code

5037 spheres, velocity= 139.354078689 +- 0.22421460436 %

25103 spheres, velocity= 34.2937046476 +- 1.06545521486 %

50250 spheres, velocity= 19.6416457779 +- 1.8690151127 %

100467 spheres, velocity= 9.66142407162 +- 1.00938117431 %

200813 spheres, velocity= 4.02108768995 +- 0.960493684787 %
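
The single-thread figures above are easier to compare as ratios. The short sketch below divides the candidate code's iter/sec by the bzr2915 value for each packing size. The numbers are copied from the two listings; the dictionary and function names are chosen here for illustration only.

```python
# iter/sec for 1 thread, copied from the bzr2915 and candidate listings above
bzr2915 = {
    5037: 110.359344232,
    25103: 27.4394736967,
    50250: 19.0980032815,
    100467: 9.22476120323,
    200813: 2.15555271381,
}
candidate = {
    5037: 139.354078689,
    25103: 34.2937046476,
    50250: 19.6416457779,
    100467: 9.66142407162,
    200813: 4.02108768995,
}

def speedups(baseline, improved):
    """Ratio improved/baseline of iter/sec for every packing size (illustrative helper)."""
    return {n: improved[n] / baseline[n] for n in sorted(baseline)}

ratios = speedups(bzr2915, candidate)
for n, ratio in ratios.items():
    print("%7d spheres: x%.2f" % (n, ratio))
```

For these numbers the ratio lies between roughly x1.03 (50250 spheres) and x1.87 (200813 spheres).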

3 threads

- bzr2915

5037 spheres, velocity= 204.067609161 +- 2.28664655815 %

25103 spheres, velocity= 38.4604068187 +- 0.911269441564 %

50250 spheres, velocity= 28.3963005702 +- 1.1619686394 %

100467 spheres, velocity= 13.308975108 +- 0.835973663701 %

200813 spheres, velocity= 2.43771034217 +- 0.446613836923 %

- candidate code, default verletDist (-0.9)

5037 spheres, velocity= 306.59232255 +- 2.66703100312 %

25103 spheres, velocity= 67.5531578312 +- 0.510164148894 %

50250 spheres, velocity= 36.9912080212 +- 1.06049975965 %

100467 spheres, velocity= 15.8309116988 +- 2.08518895749 %

200813 spheres, velocity= 5.1573687532 +- 1.13564637798 %

- candidate code, verletDist=-1.5